I made a bad decision to try and make this piece of art, in one form or another, 18 or so years ago. I think that my unconscious was programmed at an early age by basic cable and a bunch of Hanna-Barbera cartoons that came on really early in the morning on Cartoon Network, before school (especially The New Scooby-Doo Movies with Sonny & Cher, Mama Cass, the Harlem Globetrotters, and so on), and maybe also The Brady Bunch on Nick at Nite? I mean, Middle-earth is great and all, but the fictitious universe that I’d like to explore is the sort of accidental techno-utopia that might be called ‘Mr. Magoo universe,’ where everything really is happy-go-lucky, because the federal government can deflect asteroids with nuclear explosives, the air and water are disgustingly clean, cities are car-free Georgist paradises with fare-free public transport, and you can drive a fuel cell vehicle from coast to coast on a single tank of anhydrous ammonia while emitting only electron-beam-crosslinked natural rubber tire wear particles. That way, when people get hammered on the American Bicentennial, they’ve really got something to celebrate: the accomplishment of decarbonization going almost completely unnoticed. It was about as close to a quick, seamless infrastructure hot-swap as it gets. I also watched a lot of the History Channel as a kid, and as much as it pains me to say this, World War II shook out a little bit differently, and long story short, the Cold War is between the United States and Wang Jingwei’s Nationalist China (at least until he dies in 1970). The point is that it’s a purely nationalistic conflict with no meaningful economic dimension, mercifully lifting the deliriant fogs of ‘capitalism’ and ‘communism’ from the American public consciousness. These people can also think more clearly because of the lower environmental levels of lead, because high-octane coal-derived BTX and agave-derived semi-cellulosic ethanol were cost-competitive with gasoline from the 1920s on. 
It goes on and on, it’s ridiculous, and the book or whatever is just this test function that probes this model universe, and just so happens to start in Waukegan, Illinois in 1976.

There’s no good point of entry. Here, then, are two dreams I had, a screed, descriptions of some of the inanimate objects in the book, and some mood boards.

There’s nothing I love more than taking a bunch of melatonin and being suddenly awoken at ten in the morning, with the ‘save state’ of a weird dream still intact in my mind. I had two of these dreams that, of course, didn’t take place in the world of the novel, but felt as though they took place in a ‘nearby’ universe. In particular, they took place in the ‘past,’ before I was born.

The Train (2011)

I woke up inside this passenger railroad car, which seemed to have the spartan interior of a school bus. I couldn’t make anything out through the windows, in the predawn darkness. I stood up and looked around, seeing maybe three or four other people. It occurred to me that we were all in high school. I looked down at my body, and found myself wearing a beige unitard, the color of a prosthetic limb or a crash test dummy, and numbered by a little patch on the breast. I pinched the fabric, and indeed, it was spandex.

The first light of dawn had only just appeared when the train arrived at the school. The tracks went straight into the building, through an opening in the side. The train was repeatedly X-rayed as it crept inside, and after a few minutes it came to a full stop. We disembarked in the middle of a busy hallway, full of students milling about, but also these taller, adult technicians in clean room suits. Along each wall, it would alternate between, on the one hand, rows of split pea green lockers and doors to classrooms, and on the other hand, thick red-orange windows to hot cells.

As all of these students were getting books and such for first period, the technicians were using remote manipulators to handle radioactive materials. They were laser-focused on their work, seemingly unaware not only of all the chatter and the slamming of locker doors, but also of the deafening noise of the still-running diesel-electric locomotive, towering above the crowd. You could feel it inside your head and chest.

Whatever my first-period class was, it was in a windowless room. There were tiles on the floor (some of their corners were chipped), cinder block walls with thick white paint, and all the usual ceiling tiles and fluorescent lamps up above. There was a TV up in the corner of the room, by the teacher’s desk, and you could hear the relay click as it was turned on remotely for the morning announcements. We stood up, sang the national anthem of the Canadian Soviet Socialist Republic, and sat back down.

The television presenter looked kind of like Leonard Nimoy. He was lanky and gaunt, with a widow’s peak, and enormous aviator glasses. The news was all about how the men’s hockey team defeated Sweden in overtime at May Day Stadium (in Ottawa), and how the State Committee for Statistics had announced that oil production in the Athabasca was up six percent, year to date. “The tar will provide for you, Young Pioneers. The tar will provide.” Just seconds after he signed off, there was a piercing and shrill alarm. The teacher told us that there had been an accident, and to line up single file for evacuation. I could hear the feet of the desks scraping across the floor as we all stood up. The dream blacked out.

I woke up trapped inside a hollow fiberglass tree. I was bent over, with my legs inside the trunk, and my upper body inside a branch. There was an acrylic glass bubble on the underside of this branch, right around my face, and I could see out. The acrylic was scratched, and my breath was fogging up the window, so I could just barely make out the scene outside. It was this enormous shortgrass plain, stretching out in all directions, to a horizon obscured by fog. It was a bit like the original version of gm_flatgrass from Garry’s Mod, but with just enough terrain variation that it was out of the uncanny valley, looking like somewhere in the Great Plains. There were these bolt-straight train tracks, and on the other side, a brown horse grazing serenely.

My arms were pinned up against my torso, and I writhed to see if there was any way to move through the tree, or break out of it. It was futile, but at least I wasn’t being asphyxiated. The sound of my breath was tinny, reverberating inside the leafless plastic tree. The brown and green colors of the scene were muted and flattened by the oppressive blanket of clouds overhead, and the wet gray fog. The fog seemed to almost damp sound as well, such was the stillness and silence. At some point, I saw something glinting on one of the rails, and realized that there was a derail device only tens of feet from the tree.

That’s when I heard the train horn, and became filled with dread. I could both hear and feel the train coming. There were several seconds between when the locomotive derailed, and when the dream ended. The horse was completely unaffected, though, and continued to graze. It didn’t even flinch as the train folded up like an accordion, only ten or so feet away.

The Bomb (2013)

It was pitch black. I stuck my hands out to feel for anything in front of me, and tried to walk in a straight line. I could tell that the ground or floor underneath me was hard. I didn’t think that I was outside, because there were these tinny echoes, the air was still, and it seemed almost dank. Eventually I found a metal wall, and started following it with my hand. After walking for ten minutes or so, I made out a tiny speck of light in the distance, and twenty minutes after that, I could finally see that I was walking inside what seemed to be a giant HVAC duct of some sort, maybe 15 feet tall and 30 feet across. The light was coming from a tee off to the right, and I started to hear voices, and some kind of activity.

I hugged the right side of the duct. As I peered around the corner of the tee, I saw all kinds of people, from all walks of life, working on and around this very long object. There were all these parts lying around, labeled with QR codes. The object looked like some sort of golden multi-cell RF accelerator cavity, maybe a hundred feet long, and with many lobes along its length. It was in some sort of steel cradle or fixture, and there were many wires, cables and boxes attached to it. I didn’t feel threatened, so I appeared around the corner, to see if anyone knew how to get out of there.

I walked up to one of the first people I saw, and asked him what was going on. He explained that it was a Facebook event inside a Major League Baseball stadium, and there was an all-you-can-eat buffet for anyone who helped build this piece of Ikea furniture. This uniformed SS officer came out of nowhere and scared the shit out of me. He had an iPhone, and explained that when you scanned the QR code on a part, it would pull up these Ikea-style assembly instructions. He also pointed out the buffet table, with the sneeze guard, the hotel pans, and everything. It looked good, and I really wanted to grab a plate.

Right as he was holding up an Allen wrench and asking me if I was going to take the deal, I glanced over to the object, and suddenly realized that I was actually looking at the radiation case of a thermonuclear weapon with 40 or so stages, and a yield of more than a gigaton of TNT. I felt this rush of adrenaline, broke out into a cold sweat, and tried with all of my might to relax my face, so that I didn’t have even one single faint, fleeting micro-expression of distress, because I felt like this guy was going to kill me if he found out that I knew. I told him that, while the offer was tempting, I was about to have severe diarrhea, and needed to leave.

I could tell that he didn’t completely buy my excuse. He sort of tilted his head back and squinted, but luckily for me, in that instant, someone somewhere had some problem, and this Nazi had to quickly attend to it before it cascaded into more problems. He was going to come back to me.

I was panicking, trying to get the fuck out of there, and I saw this vent near the foot of the wall, which was remarkable because the floor, walls and ceiling were otherwise featureless. The screws were loose enough that I could get my fingertips underneath the grille, and pry it off the wall. I looked in and saw a room on the other side of the wall, and went head-first through this hole. I barely fit, and these raw sheet metal edges scraped across my skin as I wiggled through. I got a bunch of abrasions, but I didn’t see any blood, so there must not have been any burrs or anything.

The other side was this bathroom, covered floor to ceiling in mint green tiles. The door was dark, bookmatched wood, several inches thick. I opened the door, and found myself in a portico, at the front entrance to a downtown hotel. This cream-colored Cord 812S convertible pulled up, and this dude with a stovepipe hat and fur coat got out. He thought I was the valet and threw me his keys, looked me up and down, and told me the car was worth more than my life. I told him that I would try and save both, and tried to get in the car as fast as I could without seeming suspiciously overeager.

Even as I was negotiating that first S-turn to get out onto the street, it was obvious that I was wringing the car’s neck. I wasn’t looking at the guy, so I couldn’t tell if he saw or heard me peel out; I was looking over my left shoulder to see if I had to merge into traffic. There wasn’t any, and as I looked ahead, I was astonished by the lifelessness of this city. The few cars on the six- or eight-lane street were all parallel parked, and there weren’t any pedestrians at all. The city was perfectly grid-planned, and looking between the rows of skyscrapers, I could see this street extend all the way out to a vanishing point on the horizon. I did not hesitate to blow through all the intersections, and the daylight to my left and right seemed to strobe.

At a certain point, the city just ended. The shade of the city was gray-blue, and then this peachy, pink-orange desert world exploded into my field of vision. The street necked down into a two-lane, pitch-black, bolt-straight asphalt road on a salt flat. It was bright and my pupils contracted, but it felt cool even with the sunlight on my skin. The air was sort of salty and gritty, and there was this haze or aerosol over the landscape. I saw mountains all along the horizon, and just instinctively knew that they would protect me from the weapon effects. The wind noise was deafening with the top down, and after a minute or so, I looked in the rear-view mirror to find that the city was tiny, almost as tall as it was wide, seemingly in the center of this salt flat, concentric with this circular mountain range.

I drove straight for maybe 15 minutes, at 100 miles an hour or so. The wind altered the shape of my hair, and when I ran my fingers through it, it felt sandy.

The salt flat did end, much to my relief. I then had to navigate this network of valleys, which were low-lying but with a lot of elevation change in and of themselves, almost like rolling hills. I had no idea where I was going, just looking up at the tops of the mountains as reference points, and making educated guesses about which way to turn at each intersection. If you looked at a map of these roads, they would appear jagged and energetic. There was no way to tell in what general direction a road would ultimately take you, because it would run straight as far as you could see, sharply turn in places with no obstacles or landmarks of any sort, and run straight again. The sun was getting low on the horizon, and the mountains began to cast three-dimensional shadows in the haze, with the same blue-gray tinge as the shadows of the skyscrapers.

I kept twisting my head around to look back at the city, and eventually it did disappear behind a mountain slope, to my enormous relief. I knew that I would survive, and lost focus. The rocks had a faint pink cast, with these flowing bands of golden brown color, almost like Jupiter, or half-mixed strawberry banana yogurt. A lot of the rocks had these bowls or dimples on top, which were full of standing water, even though everything else in the desert was utterly desiccated.

By the time the sun set, I had pressed far enough into the mountain range, and a motel appeared. The woman at the front desk seemed to be bored out of her mind, almost catatonic. She barely said anything or made eye contact. I don’t know how I paid for the room. The motel bed was very stiff, elastic, and low to the ground. I turned the little black plastic CRT on, hearing the 15 kilohertz whine and feeling the static electricity near the screen, and watched the baseball game for a bit. There was a sliding glass door to a slab of concrete too small to be described as a patio. It felt good to just slide the door back and forth, with its heft and smooth action. My feet were bare, and I could feel my body heat leak into this cool slab. The mountain ridge was perpendicular to my line of sight, and I somehow knew that the city was directly on the other side.

The sight of this mountain ridge was disturbing. Even the foot of the mountain was impossibly far away, far beyond my ability to tell distance, and yet the mountains then rose so high up that I had to tilt my head up to see the ridge. It was sterile and barren, and felt dangerous, like an oncoming rogue wave. My mind wandered, and I wondered whether I was right to be so alarmed, if I had really seen what I thought I saw, and if I had stolen a car for no reason.

After dusk, it was impossible to tell where the mountains ended and the night sky began. By day, the sky was completely cloudless, and yet by night, it was completely starless. The motel windows only threw light about a hundred feet away, and beyond that was a black void. The ground was this powdery rock with the occasional pebble, almost totally inorganic. The air had actually warmed up. It was comfortable, and perfectly still.

Then the sky lit up. I couldn’t hear anything, not even any normal or pulsatile tinnitus. I had 20/2 vision. The light should have been unbearably painful, and yet it wasn’t. The detail should have been smeared out by bloom, and yet there was none. It was the starkest contrast that I had ever seen, and I could follow every delicate fractal feature of the ridgeline along the boundary of the blackest black and whitest white, scanning it from left to right over what felt like a full minute, but was actually a thousandth of a second. I closed my eyes, and yet I still saw everything as if they were open. The ridgeline was permanently burned into my mind.

From about 1980 to 2020, the transgression of planetary boundaries began in earnest, and the window of opportunity to steer human development in the direction of the “comprehensive technology” and “stabilized world” scenarios from The Limits to Growth closed. The problem was stated best by John von Neumann, in his 1955 paper Can We Survive Technology?

“In the first half of this century the accelerating industrial revolution encountered an absolute limitation — not on technological progress as such but on an essential safety factor. This safety factor, which had permitted the industrial revolution to roll on from the mid-eighteenth to the early twentieth century, was essentially a matter of geographical and political Lebensraum: an ever broader geographical scope for technological activities, combined with an ever broader political integration of the world. Within this expanding framework it was possible to accommodate the major tensions created by technological progress. Now this safety mechanism is being sharply inhibited; literally and figuratively, we are running out of room. At long last, we begin to feel the effects of the finite, actual size of the earth in a critical way. Thus the crisis does not arise from accidental events or human errors. It is inherent in technology’s relation to geography on the one hand and to political organization on the other… The carbon dioxide released into the atmosphere by industry’s burning of coal and oil — more than half of it during the last generation — may have changed the atmosphere’s composition sufficiently to account for a general warming of the world by about one degree Fahrenheit… another fifteen degrees of warming would probably melt the ice of Greenland and Antarctica and produce world-wide tropical to semi-tropical climate… Technology — like science — is neutral all through, providing only means of control applicable to any purpose, indifferent to all… there is in most of these developments a trend toward affecting the earth as a whole, or to be more exact, toward producing effects that can be projected from any one to any other point on the earth. There is an intrinsic conflict with geography — and institutions based thereon — as understood today. Of course, any technology interacts with geography, and each imposes its own geographical rules and modalities. 
The technology that is now developing and that will dominate the next decades seems to be in total conflict with traditional and, in the main, momentarily still valid, geographical and political units and concepts. This is the maturing crisis of technology. What kind of action does this situation call for? Whatever one feels inclined to do, one decisive trait must be considered: the very techniques that create the dangers and the instabilities are in themselves useful, or closely related to the useful. In fact, the more useful they could be, the more unstabilizing their effects can also be. It is not a particular perverse destructiveness of one particular invention that creates danger. Technological power, technological efficiency as such, is an ambivalent achievement. Its danger is intrinsic. In looking for a solution, it is well to exclude one pseudo-solution at the start. The crisis will not be resolved by inhibiting this or that apparently particularly obnoxious form of technology. For one thing, the parts of technology, as well as of the underlying sciences, are so intertwined that in the long run nothing less than a total elimination of all technological progress would suffice for inhibition. Also, on a more pedestrian and immediate basis, useful and harmful techniques lie everywhere so close together that it is never possible to separate the lions from the lambs… Similarly, a separation into useful and harmful subjects in any technological sphere would probably diffuse into nothing in a decade… Finally and, I believe, most importantly, prohibition of technology (invention and development, which are hardly separable from underlying scientific inquiry), is contrary to the whole ethos of the industrial age. It is irreconcilable with a major mode of intellectuality as our age understands it. It is hard to imagine such a restraint successfully imposed in our civilization. 
Only if those disasters that we fear had already occurred, only if humanity were already completely disillusioned about technological civilization, could such a step be taken… For progress there is no cure. Any attempt to find automatically safe channels for the present explosive variety of progress must lead to frustration. The only safety possible is relative, and it lies in an intelligent exercise of day-to-day judgment.”

The book is an attempt to fulfill two objectives. First, I want to convey just how contemptible, unforgivable, childish and knowing the failure to kick the “business as usual” habit at any point in the 1980–2020 period was. It would seem that, if humanity were considered as a superorganism, that superorganism would have a severe drug problem and executive functioning deficit. I have some experience in this area, and I should know. It’s not so much that these decisions guaranteed that this or that disaster will happen at some point in the future, it’s that there was a cavalier attitude, power vacuum and abdication of responsibility at the commanding heights of planetary-technological civilization. The transgression of planetary boundaries was downplayed as beyond any reasonable planning horizon, overblown by sadomasochistic Brahmin Left hypocrites, and an issue in human development of no particular importance, which would be handled in due course by unspecified non-coercive, spontaneous self-organization, or perhaps divine intervention. The complexity, abstractness and historical novelty of these problems certainly puts them in a sort of collective blind spot, but the elite must pay its own way by handling precisely these problems. The book is set in 1976–1977 because I want to turn the future-orientation of science fiction on its head as a way of mercilessly, disrespectfully dunking on humanity (and beating a live horse for once). Second, I want to envision a clean break from business as usual, in the full-blown and fully-integrated form of a fictitious universe. The joke is that, although this world is obviously a contrivance, it is what might be called a ‘Mr. 
Magoo universe,’ in which an exceedingly high level of human development has been attained in a freak historical accident, rather than through “patience, flexibility,” and “intelligence.” As these virtues are virtually non-existent, sustainable development could only ever have happened by accident, and this is non-negotiable for my suspension of disbelief.

It’s safe to say that adulthood involves, among other things, making compromises, coping with grief, and planning ahead. These might be described as the virtues of amenability, equanimity, and foresightedness. In particular, when an adult is confronted with a problem that will only become worse with time, they address it at the earliest opportunity. In my opinion, the unabated continuation of business as usual through the 1980–2020 period speaks to the intellectual and spiritual bankruptcy of the developed world. I understand why feeling “the effects of the finite, actual size of the earth in a critical way” is depressing to people steeped in “the industrial way of life,” because I feel that too. It is certainly painful to give up on the cornucopian dream of “an ever broader geographical scope for technological activities.” But that’s life, and it can’t be helped.

The irony here is that the “comprehensive technology” and “stabilized world” scenarios would not have been “contrary to the whole ethos of the industrial age” at all, in the grand scheme of things. Much as the New Deal salvaged and rehabilitated capitalism by reining in its excesses (and thereby preserved the existing social order), the goal of these techno-economic reforms was the adaptation and survival of technological civilization, with many or most of its perks and conveniences intact. By kicking the can down the road for 40 years, we have greatly magnified the risk that humanity will ultimately become “completely disillusioned about technological civilization,” and “the industrial way of life” will be lost.

It takes a finely-calibrated sense of taste to know when to be techno-optimistic, and when to be techno-pessimistic. The best technological prescriptions are syncretic, freely mixing high and low technology, as well as acceptance and rejection of conventional wisdom. The best example of this in the book is the passage from conventionally-bred, non-native, annual monocultures to genetically-engineered, native, perennial polycultures. The idea is that the agroecosystems with the lowest input costs and environmental impacts are the multistory perennial polycultures most closely resembling the forest and prairie ecosystems that exist in the absence of human intervention. Genetic engineering makes it possible to domesticate wild plants de novo (without the linkage drag that would otherwise cause many of their desirable traits to be unintentionally bred out) and build “multifunctional” agroecosystems that provide not only the usual provisioning services, but also substantial ecosystem services of other kinds. These perennials are high-yielding and mechanically-harvestable, but retain all of their natural pest resistance traits, among other things.

While decarbonization is probably the most important single departure from business as usual, global warming and ocean acidification are but two of the nine planetary boundaries. The use of land, water, and raw materials must never be forgotten. There may be a menu of “suitable new political forms and procedures” to choose from, but they will all involve high state capacity as a tactical necessity of resolving large-scale coordination problems. The political solutions will come largely from John Roemer’s “Kantian equilibrium,” and perhaps also Georgism. It may be too late for the “comprehensive technology” and “stabilized world” scenarios, but it is never too late for the underlying political and technological innovations (and exnovations). In this way, the book is just as much a vision of our future as it is a vision of a past that never was. It is a shamelessly, transparently deluded and escapist retro-futuristic techno-utopian fantasy, but I swear to God that at the end of the day, all of that is just a means to an end — helping to create the ideal conditions for an Enlightenment to actually occur for the first time in history.

The Techno-Economic Setting

Almost no science fiction deserves to be called “science fiction,” or even “science fantasy.” It’s all imagination and no straitjacket, almost never addressing practical problems in a detail-oriented, multi-disciplinary, and physics-based way, making it little more than a style exercise. And it’s not just that it’s useless, it’s that it has a completely misplaced sense of what is futuristic. The world we live in is not modern at all, and as such, there is a vast and almost entirely unexplored overlap between the futuristic and the mundane.

I hope you’ll believe me when I say that my motivation for doing all of this technology-oriented worldbuilding is not to wallow in esoterica for its own sake, show off something I (rightly or wrongly) believe to be clever, or express some kind of uncritical lust for technology. It has to do with my debilitating affinity for what I perceive as technological beauty, elegance, and perfection. I think of myself as tending to a garden of pure Forms in the Platonic ideal world, where the perfect all-in-one washer-dryer and the perfect district heating and cooling system are on display, next to the regular dodecahedron and the number 137, all on plinths, and with informational placards.

It’s very important to note that although a lot of these machines are weapons, or weapon-adjacent, they’re really just a way of articulating a certain philosophy, and approach to problem solving. I would contend that weapons are some of the most telling cultural artifacts produced by any technological civilization. One of the themes in the book is that, in a world of ubiquitous illiteracy, tribalistic one-upmanship is the only motivation for technological and human development comprehensible to ordinary people. It might be for the wrong reasons, but it is very much the right thing, so I can’t complain. By no means is this all just about weapons — there’s plenty of other stuff like a deep space mission to land cosmonauts on Callisto and robotically search for the hypothetical subsurface ocean on Europa, vintage clothing made from NaOH- and NaClO₂-treated Calotropis fruit fiber, towering airlift bioreactors for the submerged fermentation and biopulping of wood chips by domesticated Ceriporiopsis subvermispora, affordable thermochemical and electrochemical routes to titanium, heart-healthy vegetable shortening fractionated from the “high-stearic-high-oleic” oil of transgenic edible Jatropha curcas, transgenic Parthenium argentatum that secretes PHB granules in its “bark parenchyma cells,” the effort (at the intersection of the Buffalo Commons and Jurassic Park) to replenish stocks of bison using interspecies embryo transfer, manipulative wildlife management, and DNA from the archeological record for genetic rescue… And Karen Carpenter is still alive.

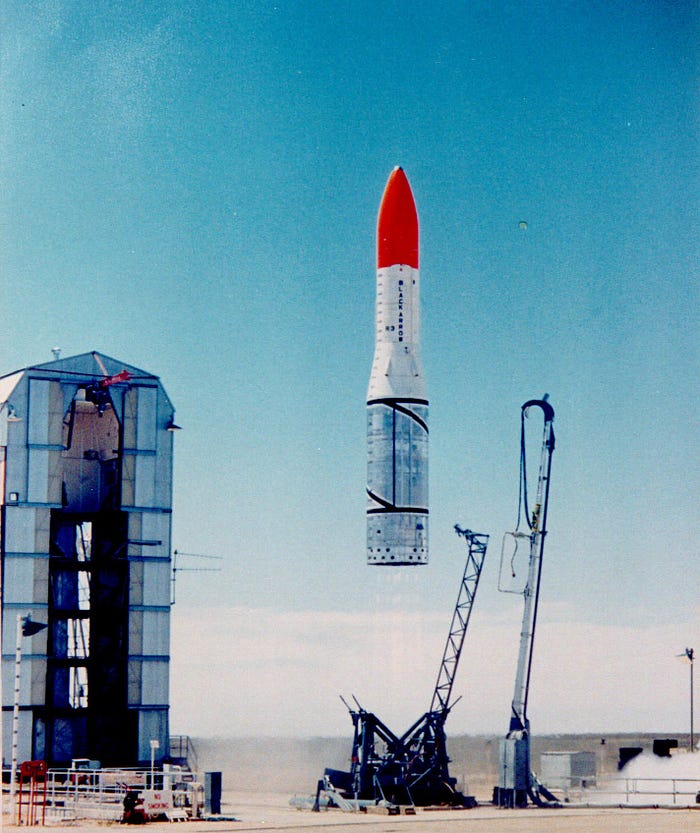

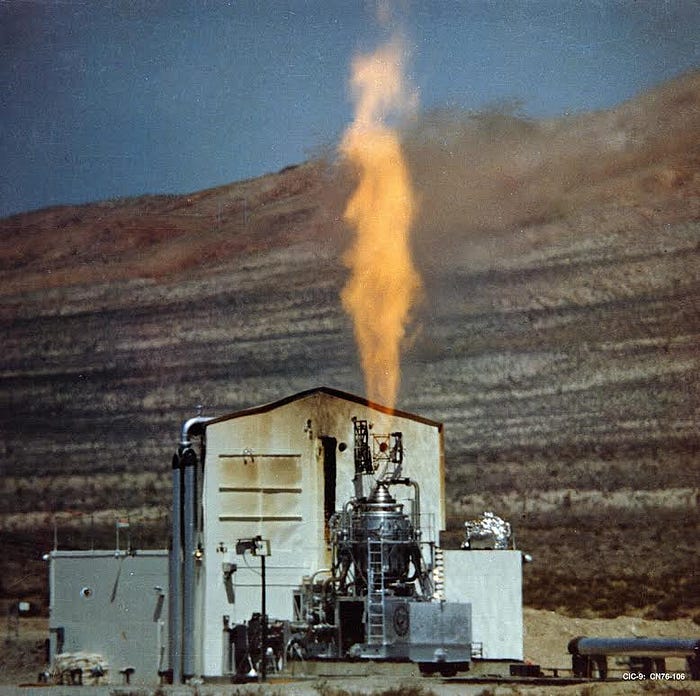

#1: EG&G Asterius. The stadium-sized cryogenic vacuum blast chamber for laser-triggered bomb-pumped laser-driven nuclear micro-bomb physics experiments that make inertial-confinement fusion ignition as easy as killing ants with a magnifying glass.

#2: General Electric Mark 5 × Aerojet UGM-96A. An almost solid cylinder of unstoppable mass murder. It may be ten pounds of SLBM in a five-pound missile tube, but it’s surprisingly easy to live with over the 15-year maintenance interval (truly the Honda NSX of nuclear weapon systems).

#3: Xerox Paperless Desktop. This stupidly overpowered home computer pushes silicon, static CMOS and DRAM to their absolute limits, with Cu-Cu thermocompression bonding of a general-purpose triggered-instruction tile processor and eight DRAM chips into a back-thinned chip stack MCM, connected through a Foturan glass printed circuit board to an electron-beam-addressed phase-change RAM (EBAPCRAM) storage tube. The hermetic encapsulation of the electrical components in borosilicate glass by chemical vapor deposition at intermediate temperatures allows two-phase immersion cooling in automotive antifreeze, with a high critical heat flux.

#4: Aerojet-Babcock & Wilcox M-1. This is the nuclear thermal rocket engine with 1,084 seconds of specific impulse that helped land American cosmonauts on Mars in 1969 and return them safely to the Earth in 1971 (Hugh Dryden “preferred ‘cosmonaut’ on the grounds that flights would occur in and to the broader cosmos, while ‘astronaut’ suggested flight specifically to the stars”). The Stationary Liquid Fuel Fast Reactor is “suspended within the expansion-deflection nozzle bell,” and “gamma shield material (tungsten) can be integrated into a high-temperature nozzle throat component, because the nozzle’s radial outflow sonic throat is located forward of the reactor” and “the reactor’s location is remote from the majority of supersonic gas that expands off the nozzle wall, having been directed there by the annular throat.” “It is implicit in [the] design concept that the tungsten nozzle… weight be accounted as nearly zero, because the gamma shielding provided by [this] forward-mounted component is necessary to shield controls, components, propellant, and crew.” This takes thousands of pounds out of the engine, greatly increases the TWR, and even “has a small specific impulse advantage, because [the] high surface area, gamma heated tungsten nozzle heats reactor outlet gases slightly, whereas nuclear thermal rocket engines of conventional design lose about five seconds of specific impulse by cooling nozzle gases with a cryogenically cooled throat.” The hydrogen propellant flows forward through tungsten alloy microtubes that are 98% isotopically enriched in ¹⁸⁴W in order to reduce thermal and epithermal neutron absorption, alloyed with 5at% rhenium for reasonable low-temperature ductility, and immersed in (and wetted by) the molten ²³³U-rich alloy fuel. The ²³³U has less than a third of the critical mass of ²³⁵U (as well as a slightly higher thermal conductivity), and is bred from ²³²Th in a two-fluid molten salt reactor with a hydrous ⁷LiOD-NaOD fuel salt.
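For a sense of scale, here is a rough back-of-the-envelope sketch of what 1,084 seconds of specific impulse buys you, using only the textbook relations v_e = I_sp · g₀ and the Tsiolkovsky rocket equation. The 450-second chemical engine and the 4,000 m/s maneuver are illustrative assumptions of mine, not figures from the book, and the function names are made up for this example.

```python
import math

G0 = 9.80665  # standard gravity in m/s^2 (exact by definition)


def exhaust_velocity(isp_seconds: float) -> float:
    """Effective exhaust velocity in m/s for a given specific impulse."""
    return isp_seconds * G0


def mass_ratio(delta_v: float, isp_seconds: float) -> float:
    """Tsiolkovsky rocket equation: initial mass over final mass."""
    return math.exp(delta_v / exhaust_velocity(isp_seconds))


if __name__ == "__main__":
    M1_ISP = 1084.0        # seconds, the figure quoted for the M-1
    CHEMICAL_ISP = 450.0   # seconds, ASSUMED typical hydrogen-oxygen engine
    DELTA_V = 4000.0       # m/s, HYPOTHETICAL maneuver chosen for illustration

    for name, isp in (("M-1", M1_ISP), ("chemical", CHEMICAL_ISP)):
        print(
            f"{name}: v_e = {exhaust_velocity(isp):.0f} m/s, "
            f"mass ratio for {DELTA_V:.0f} m/s = {mass_ratio(DELTA_V, isp):.2f}"
        )
```

The M-1 figure works out to an effective exhaust velocity of roughly 10.6 km/s. Because the mass ratio is exponential in delta-v divided by exhaust velocity, the higher specific impulse translates into a disproportionately smaller propellant load for the same maneuver.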

“Molten uranium has several advantages over solid ceramic fuels such as UO₂, UC, and UN. The relatively high thermal conductivity of uranium plus convective flow of the liquid would reduce thermal gradients and thus result in a nearly isothermal heat source. Also, because the uranium density is higher than in solid ceramic fuels, smaller and lighter reactors are possible for space vehicles. In addition, fuel element swelling, a major problem with solid fuels, would be eliminated by using a molten fuel… Metals generally exhibit much better thermal conductivity and thermal shock resistance than ceramics. In addition, the fabricability… would be much better with a metal than with a ceramic or ceramic-coated metal… no eutectic or chemical compound forms between tungsten and uranium… tungsten and uranium exhibit low mutual solubilities at elevated temperatures… After uranium solidifies at 1130°C, its density increases… over 5% during cooling to room temperature because of thermal contraction and allotropic transformations… Tungsten, on the other hand, exhibits a relatively minor thermal contraction when cooled from 1130°C. Thus, the shrinking uranium can cause stresses in the capsule walls which, if the capsule-uranium bond is strong enough, can be relieved by forming cracks in the walls… if liquid uranium fuel elements were used in a nuclear reactor… shutdown or cyclic operation at temperatures below the melting point of uranium could result in container cracks and in subsequent fuel losses.”

“The… liquid metal fuel reactor… could prove useful for space power generation since it could be operated as a relatively compact fast reactor… One of the most advantageous features of a fluid fuel reactor is its inherent safety and ease of control. A liquid fuel which expands on heating results in a negative temperature coefficient of reactivity. Since the rate of expansion is limited only by the speed of sound in the liquid, this effect is essentially instantaneous and tends to make the reactor self-regulating.”

The “high actinide concentration in combination with a… high heat transfer capability… leads to a high power density” and small “core volume… vessel diameter and shield diameter,” and therefore a relatively light shadow shield. “Refractory metal structural integrity may result in improved fission fragment retention,” and the “rugged construction may offer improved shock loading.” The large, prompt, negative temperature coefficient of reactivity “due to the thermal expansion of liquid fuel” not only stabilizes the power level globally without any “rapid control system,” but also stabilizes it locally, providing “inherent hot spot compensation.” The “fuel-to-coolant thermal resistance” is low, both because the fuel and “fuel container” are electronically conductive, and because tungsten, uranium, and hydrogen are chemically compatible up to 3500K, obviating any protective coatings and the thermal contact resistances (phononic band structure mismatches) that they introduce. This avoids “very steep temperature gradients, which in turn yield undesirable high fuel and low coolant temperatures,” and increases Iₛₚ. It is very important that the outlet of the reactor be isothermal, with the same “thermal margin” to the “maximum use temperature” of the tungsten alloy everywhere, in order to minimize thermal stress in the floating tubesheet, and maximize the “mixed mean reactor outlet gas temperature.” In other words, it is important for “radial power shaping” to “allow for significantly more thermal margin… resulting in significantly hotter coolant outlet temperatures,” and in turn, higher Iₛₚ. The hope is that the thermal margins will be no more than 200K and as little as 100K almost uniformly across the outlet, resulting in mixed mean outlet temperatures of 3300K or even 3400K, comparable to those of reactors with advanced tricarbide fuels.

“At a high level, power shaping is useful for reducing the peak fuel temperature for the same overall core power. In the case of [nuclear thermal propulsion] this would be used to either increase the core power and thus the rocket performance, or the reactor size could be made smaller, improving the [TWR]. Power shaping is also useful for reducing differences in the coolant outlet temperature which allows for a simpler and potentially lighter manifold or orifice… since the outlet coolant temperature of 2700K is only 100K lower than the fuel temperature limit of 2800K, even a small local power excursion at the bottom of the core can exceed thermal limits. Therefore, shifting power to higher in the core can increase the thermal margin.”
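The link between outlet temperature and Iₛₚ can be sketched with the ideal scaling in which specific impulse goes as the square root of gas temperature over molar mass. This is a rough estimate that assumes a fixed molar mass and expansion ratio. It ignores hydrogen dissociation, which would probably push the real gain somewhat higher. The function name is hypothetical.

```python
import math

def isp_ratio(t_hot_k, t_ref_k):
    """Ratio of specific impulses under the ideal scaling Isp ~ sqrt(T / M),
    with the molar mass M and the expansion ratio held fixed."""
    return math.sqrt(t_hot_k / t_ref_k)

T_REF = 2700.0  # K, outlet temperature in the quoted power-shaping example
for t_out in (3300.0, 3400.0):  # K, mixed mean outlet temperatures hoped for in the text
    gain = isp_ratio(t_out, T_REF) - 1.0
    print(f"{t_out:.0f} K vs {T_REF:.0f} K: Isp higher by about {100 * gain:.1f}%")
```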

“High-temperature, long-lived, fast-spectrum, fully-enriched reactors are of interest for power generation in space. The small size of these systems generally necessitates using an external control system which is based on leakage, and/or absorptions of neutrons… Reactor designers have used power flattening, i.e., uniform power generation per unit volume of core, in order to decrease the ratios of peak-to-average core temperature and fuel burnup.”

This is much like solid-liquid “interface location and morphology control” in the growth of bulk single crystals from the melt, where the “interface shape is determined by the melting-point isotherm which… is set by heat transfer,” and “the heater’s thermal geometry must be specifically tailored… to achieve the desired planar isotherms.” The “thermal gradients caused by surface cooling of a material with internal heat generation” (in particular those “temperature gradients in the radial direction that are generated by heat losses”) may be flattened by strategically placed thermal insulation and neutron reflectors that modulate the losses of heat and neutrons “to the surrounding environment,” as well as a “modest departure” from the “simple geometry” of the fuel container. Most importantly, the M-1 is designed for “long operating life with multiple restarts and temperature cycling.” Fast reactors lend themselves to this, as the neutron economy is largely unaffected by the gradual transformation of the binary eutectic fuel into a witches’ brew of innumerable actinides and fission products.

“Although… binary eutectics have not yet been considered as liquid metal fissionable mixtures for nuclear power plants, they are… characterized by a very high actinide concentration, which optimizes the neutron economy and thus the transmutation capability of the reactor. Their melting point is 800°C, which qualifies them for operation. The boiling points are well above 2000°C, so that the operating temperature can also be increased… The high thermal conductivity makes continuous pumping of the fission fluid obsolete. Overall, this leads to an increase in power density and thus also to higher power plant efficiency… The concentration of fissile materials must be significantly increased for fast fission reactors… fission products could initially be completely dissolved in the alloy due to the low mass turnover of nuclear fission. However, the outstanding neutron economy… allows such long operating times without reprocessing of the fissionable mixture that… the concentration can increase to such an extent that agglomeration effects occur. In addition, the actinide nuclides also change due to sterile neutron capture and beta decay; in addition to new uranium and plutonium, protactinium, neptunium and americium are produced, for example. This results in mixtures that differ significantly in quality and quantity from binary eutectics. The mixtures with more than two components and in particular increased fission product concentration may deviate in the parameter range from the values for eutectics, but as long as the solidus temperature and the total viscosity are low enough for pumping, this does not affect the operability.”

The fuel container is “topologically similar to a once-through steam generator.” “The architecture of microtube heat exchangers is similar to conventional shell-and-tube exchangers but with very small tube diameters on the order of 1mm. Baffles are not typically needed in microtube heat exchangers because the small tube sizes and spacing can provide high enough heat transfer without creating crossflow. Microtube heat exchangers can accommodate a much higher number of tubes than conventional shell-and-tube heat exchangers.” The idea is that the microtubes have a ratio of surface area to volume comparable to that of a bed of “grain-sized spherical particles” with a “total outer diameter of 2mm,” but the fuel container does not partition the fuel axially, radially, or tangentially. The liquid fuel is a continuous phase, directly accessible through penetrations in the “fuel container,” with the advantages that three-dimensional mass flow occurs all the way up to the scale of the entire active region so that power shaping is maximized and mixing of the fuel “as contrasted to only localized irradiation of a solid fuel provides improved fuel utilization,” outgassing removes fission product gases more or less for free, the flow of cover gas into and out of the reactor can pressure-balance the fuel and propellant, the fuel can be foamed by gas bubble injection as it cools and thickens during engine shutdown so that “melt-freeze cycling… does not overstress the fuel tank,” and refueling in space (should the fuel container outlive the fuel) is conceivable because it “does not require dismantling or reconfiguring the core.” These features “eliminate the traditional concern of fuel cladding integrity due to irradiation and buildup of fission gas pressure, and thus… allow a very high burnup,” and may accommodate reductions in the thickness of the microtube walls, or even the rhenium content of the alloy, both of which would decrease the thermal resistance and increase Iₛₚ. 
The inner and outer reflectors of the “dual rotating windowed reflector” are both “capable of independently controlling the reactor through its full range from startup to shutdown throughout its life,” and the “manipulating of reflectors keeps the reactor compact and reduces the amount of core penetrations.” “Thaw is initiated within the reactor core by a programmed addition of reactivity through movement of the control reflectors. Reactivity additions are initially small so that core temperature increases are gradual to avoid severe temperature gradients and the possibility of overstress. Accommodation of volume expansion is provided by engineered voids or gas-filled bubbles emplaced within the reactor vessel.” “The entire system is designed for repeated freeze and thaw cycles, and rethaw/restart on orbit is not any different than the initial thaw and startup… The reactor is self-thawed by nuclear heating.” The fuel is mushy, “soft and wettable in character” over a large temperature range, “and can be extruded through and around intermittent solid barriers… taking advantage of the extrudability characteristic in avoiding the possibility of structural damage.” In the hot, flowing hydrogen environment, “tungsten has the lowest vaporization rate of all materials.” “The high operating temperatures are well above the brittle-ductile region of refractory metals,” and damage “caused by the high neutron flux as well as thermal stress will be automatically healed at those high temperatures (annealing in metals),” as “the ability of displaced atoms to return to a vacancy site or other relatively innocuous location is enhanced.” The hope is that, as the reactor is broken in and aged, “thermal stresses induced by the thermal gradients will beneficially relax with time due to creep” (this might actually be done on the ground, in a non-nuclear “high-fidelity thermal simulator”), and enough decay heat will build up that the engine “does not fully cool between major burns” and 
the fuel “can easily be maintained in a molten state.” The high Iₛₚ, high TWR, and long service life of the M-1 allow the General Dynamics Space Tug (with its lightweight, “zero boil-off” stainless steel balloon tank) to complete “advanced space missions” throughout the Solar System, and then boost back to propellant depots to be reloaded with propellant and reused. This reduces the rate at which these workhorse orbital maneuvering vehicles are expended and replaced at any given level of Space Transportation System activity. The M-1 is historically significant in and of itself, but even more significant as the design base for later space, marine, and terrestrial systems that couple the ultra-high-temperature reactor to a Rankine cycle power conversion system with a magnetohydrodynamic generator in which the electrical conductivity of the “100% enriched ⁸⁷Rb” working fluid is enhanced by neutron activation and “non-equilibrium ionization by ⁸⁸Rb decay products,” without “the need for an ionizing seed” or electron-beam irradiation (note that this is only possible if the reactor is internally-cooled, and the working fluid flows through the active region). This includes the Westinghouse-Babcock & Wilcox R-1 magnetoplasmadynamic thruster, and the fleet of large, commercial, ‘cosmoderivative’ B-4000 power plants (with nameplate capacities of about 4GWₜ and 3GWₑ) based thereon. The B-4000 is a ²³⁸U converter with an exceptionally hard neutron spectrum, ⁸⁷Rb Rankine MHD topping cycle, and one of two bottoming cycles: a thermomechanical Brayton cycle inert gas turbine, or a thermochemical sulfur-iodine water-splitting cycle with SiC bayonet reactors that eliminate “the need for high-temperature connections, seals and gaskets and the associated leaks and corrosion problems” (the S-I cycle is the foundation of the hydrogen and ammonia economies). 
The high-pressure turbine blades are TZM, which “is almost insensitive to [the] helium environment up to 1000°C,” and “exhibits a completely different creep-resistance behaviour as compared to the nickel-base alloys, mainly because of its high melting point,” such that “almost flat creep curves make a 100,000-hour lifetime appear possible with an uncooled blade,” although the working fluid is probably an He-Xe mixture, with a higher density that significantly decreases the size and capital cost of the turbomachinery, but without significantly increasing the size and capital cost of the heat exchangers. The topping cycle could also reject heat to a calciner at 1000°C (in which case the concentrated CO₂ waste stream is reacted with “calcium-rich saline evaporitic brine” from salt wells to produce “saleable construction aggregate… gypsum, halite, sylvite, mirabilite, and hydrochloric acid”), or even a glass production plant at 1300°C (a temperature on the ragged edge of what is possible in molten salt reactors). The reactor has an outlet temperature of at least 2500°C, and so the combined cycle has preposterously high thermal efficiency even if it rejects heat to air.

“While the operating temperatures of the reactor are limited ultimately by the structural materials holding the reactor together, and not by the temperature of the fuel itself, the upper limits can be increased now considerably above the 400-500°C range of most fission reactors, up to the point almost of vaporization of the liquid uranium fuel. This occurs at atmospheric pressures in the approximate range of 3500°C, but even higher at super-atmospheric pressures. Thus the efficiency of power generation can be increased from 30-35% to 50-65% at the 1000-2500°C range and up to 75% at temperatures approaching 3500°C.”
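As a sanity check on those efficiency ranges, the Carnot limit gives the ceiling that any heat engine faces between a given source and sink. This is a minimal sketch: the source temperatures are taken from the passage above, the 27°C sink is an assumption standing in for heat rejection to air, and the function name is hypothetical.

```python
def carnot_limit(t_hot_c, t_cold_c=27.0):
    """Upper bound on heat-engine efficiency between two temperatures in deg C."""
    t_hot_k = t_hot_c + 273.15
    t_cold_k = t_cold_c + 273.15
    return 1.0 - t_cold_k / t_hot_k

# Source temperatures from the quoted passage; the 27 deg C sink is an assumption.
for t_hot_c in (500.0, 1000.0, 2500.0, 3500.0):
    print(f"{t_hot_c:6.0f} C: Carnot limit = {carnot_limit(t_hot_c):.1%}")

# Nameplate ratio of the B-4000 from the text: about 3 GWe out of about 4 GWt.
print(f"B-4000 nameplate efficiency = {3.0 / 4.0:.0%}")
```

The quoted figures sit below the Carnot ceiling at every temperature, so they are at least thermodynamically permissible. How closely a real combined cycle could approach them is a separate question.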

In those plants that are water-cooled, the mass of cooling water consumed per unit of energy produced is very low (and the waste heat is almost always utilized by “an industrial process or district heating system,” rather than discharged directly into the environment). The generation of process heat at ultra-high temperatures also results in very low consumption of uranium and production of “fission product wastes” per unit of generated electrical (or chemical) energy. These outlet temperatures and thermal efficiencies are only possible with molten metal fuels, which might be said to have a generally higher degree of physicochemical compatibility with metallic structures than molten salts. It is a myth that molten metal fuels in general have high vapor pressures, and therefore preclude operation at both high temperatures and low pressures; uranium boils “at atmospheric pressures in the approximate range of 3500°C, but even higher at super-atmospheric pressures,” for example 5000K at 10–20atm. It’s interesting how propulsion systems tend to be very good design bases for stationary power plants in that, because they are subject to severe weight and volume constraints, stationary power plants derived from them generally have small footprints, and low capital costs (which largely determine the levelized costs of electricity). This can be seen in the compelling business case for aeroderivative gas turbines in peaking power plants, and in how the real-world Aircraft Reactor Experiment (“the first reactor to use circulating molten salt fuel”) paved the way for “compact” molten salt reactors with high outlet temperatures and thermal power densities, and commercial prospects good enough to merit serious consideration of a nuclear renaissance. 
It’s hard to make a direct comparison because the duty cycles couldn’t be more different, but it’s not outrageous to think that a nuclear thermal rocket engine, designed for a power density rivaling that of chemical rocket engines and years of unattended operation in deep space where it cannot be serviced, could be the starting point for a uniquely small and low-maintenance terrestrial power plant. What I found surprising is that, although nuclear thermal rocket engines are almost certainly the nuclear propulsion systems most sensitive to weight, volume, and complexity, fast reactors seem to have the upper hand over thermal reactors in this application, and the implications for the design of stationary power plants run counter to conventional wisdom. “The advantage of thermal spectrum reactors is that criticality can be achieved with less fissile material in the core. In turn, the advantages of fast reactors are that no moderator is necessary, thereby allowing more space for fuel…” “Fast spectrum reactors… tend to be much more compact than thermal spectrum systems, although that does not automatically translate to lower mass systems with higher thrust-to-weight. 
The inherently higher fissile mass of a fast spectrum system is an important disadvantage.” It seems that you can get quite a lot for the price of a large fissile inventory and “core non-fuel volume,” especially with a metallic “concentrated (undiluted) fuel fluid.” Indeed, the core of LAMPRE (a proto-SLFFR with plutonium-rich alloy fuel, a tantalum vessel, and sodium coolant) was a cylinder with a 6.25" outer diameter and 6.5" height, and the business case for the SLFFR is that the simple geometry of the “fuel container and minimal reactivity control requirements will result in a very simple reactor core system,” operation “at a high power density and temperature… would lead to a compact core system… the high operating temperature would yield a high thermal efficiency,” and the combination “of these characteristics… reduce the capital cost per unit energy produced drastically.” The insensitivity of the neutron economy to fission products and refractory metal structures in the active region permits “an internally cooled LMFR to reduce fuel inventory,” with a stationary (rather than circulating) fuel that “remains in the core except for small amounts which are withdrawn for reprocessing” by relatively simple “co-located pyroprocessing” using “equipment that better approximates a continuous flow process for the purpose of aiding mechanical automation.” “Economic considerations limiting the inactive fissionable material inventory, and requiring maximum utilization of the active inventory result in very small cores, high heat fluxes, and the impossibility of a circulation system. For these reasons, the passage of the coolant through the core rather than the use of an external exchanger seems mandatory for the fast power reactors.” If, for example, someone throws a toaster into a bathtub in No Frills, they may very well be using a service of the Army Corps of Engineers, and a spin-off of NASA interplanetary space propulsion research. 
Large, commercial nuclear power plants are no less “Army civil works” than locks and dams, as the ACE is uniquely well-positioned to both deliver mains electricity to “homes and businesses through the electrical grid,” and help carry out the nuclear deterrence and non-proliferation missions, as well as promote “power system resilience” and the conservation of energy resources, all of which contribute to Comprehensive National Power. In the alternate-history EIA Annual Energy Review Sankey diagram, you will see that nuclear energy and fish-friendly, run-of-the-river hydropower from the ACE meet most of the demand for energy in the United States, and the balance is provided by hot dry rock geothermal energy (often in the form of “combined heat, power and metals production”).

#5: 1927 Ford Model A × 1953 Ford Mystère. These four-door sedans embody the averting of two or three environmental disasters (though certainly not all of the environmental disasters wrought by road transport). The futuristic Model A embraces the arc-welded steel semi-monocoque of Joseph Ledwinka and the aerodynamic design of Paul Jaray. In order to minimize the height and frontal area, it has a rear-mounted “transverse engine and transaxle,” without any exhaust pipes or driveshafts (or “conventional frame rails”) “running back from the front of the car” that “would need extra space to fit between the underbody and the road.” The radiator ingests air “through inlets along the rear fender surfaces” at surprisingly high “ram airflow levels,” removing “some of the low energy boundary layer from the quarter panels, delaying body-side airflow separation and reducing base drag,” as well as exhausting “cooling air out the back” to “help efficiency by filling the wake.” The Model A also has “a full-length flat undertray,” and “inset extra glass panels” to “achieve a curved airflow around the A-pillar.” The use of long and continuous seam welds, rather than spot welds, reduces structural weight. “Closed-section structure is advantageous for increasing the strength and reducing the weight of car bodies, but… resistance spot welding that needs access both from outside and inside is not applicable. When resistance spot welding must be applied to closed-section parts, working holes are required, which, in a great number, spoil the structural rigidity of the work. As a solution, arc welding is sometimes opted for because of the workability from one side only.” The narrow, large-diameter nylon-belted radial tires have a small frontal area and very low rolling resistance. 
The idea is that, because the engine power required to overcome aerodynamic drag and rolling resistance is relatively low to begin with, and tight integration of the radiator with the base of the vehicle alleviates base drag, accommodation of the “additional cooling required for Stirling engines… by enlarging… the existing cooling water radiators” does not cause unacceptable ‘drag compounding.’ As such, the Model A has supremely low emissions of “engine noise, tire/road noise and wind noise.” The Vulcan four-cycle double-acting Stirling engine is ready for prime time, with a variable-angle wobble plate for simple “variable-stroke power control,” “a pressurized ‘crankcase’ with a… rotating shaft seal containing the working fluid and making it possible to avoid the reciprocating rod sealing problem,” self-lubricating powder metallurgy composite “cylinder wall sleeves” for “hot rings” that eliminate “appendix gaps” and the associated losses (the hot extrusion of the sleeves “both consolidates and shapes” so that “near net shape and finish can be achieved” in a single process step, “dramatically reducing the manufacturing costs compared to plasma spray deposition”), and “castable, low-cost, iron-based superalloy” “individual stacked heater heads” with a “relatively short warm-up time.” “A controller system constantly monitors the engine coolant temperature and adjusts the fan pitch in order to provide adequate cooling airflow, which is dependent on the actual ambient temperature of the load. This will reduce the fan parasitic load (power draw) and hence fuel consumption. 
Reversing the blades will help clean the radiator fins of any dirt or debris lodged in the fins.” “Forced draft units require slightly less horsepower since the fans are moving a lower volume of air at the inlet than they would at the outlet.” This external-combustion engine can run on inexpensive coal-derived carbon black with “nearly flat torque and efficiency curves” (“the efficiency falls to no less than” 3% “below peak over approximately 70% of the total load range,” and the “advantageous torque characteristics at low engine speeds” permit “transmissions with fewer gear steps than a corresponding diesel engine”) and no muffler. Weirdly enough, this goes back to the World War I naval blockade of Imperial Germany. In order to meet my alternate-historical objectives while also maintaining adequate suspension of disbelief, I need them to still lose World War I, but do it in a very precise way that sets up everything afterwards, and especially World War II, which is so violent, apocalyptic and trippy that only the United States and Nationalist China (Republic of China, 1912 to the present) survive to fill the international power vacuum, after the complete and total annihilation of the Soviet Union, France, the United Kingdom, Imperial Japan, and Nazi Germany. 
The idea is that, being wracked by severe shortages of raw materials, Imperial Germany made enormous strides in the utilization of natural resources — ranging from the selective sulfidation of nickel oxides in low-grade laterite ores from the Balkans, to non-abrasive inorganic hydraulic fracturing fluids that “penetrate deeply into the formation through complex fracture networks, forming in situ proppants to allow entire induced fractures to contribute to oil and gas production” in the Posidonia Shale — then unconditionally surrendered, transferred this body of “scientific and technical know-how as intellectual reparations” to the Entente Cordiale, and inadvertently laid the foundations of not only commercial geothermal energy, but also the cornucopia of tightly-coupled geothermal, geochemical, and geomechanical systems that would come to be known as ‘geotechnology.’ This had an immense impact on the 20th century thereafter, by opening up innumerable opportunities for the “extraction and production of raw materials” across the world, and considerably reducing the “volume of world trade,” the strategic interdependence of the international community, and the degree to which any political objectives could be met by intervening in the “extraction and production of raw materials.” By 1918, researchers had turned the problem of “carbon deposition and metal dusting” on its head (a Cinderella effect), and mastered the continuous precipitation of carbon black as syngas cools in a fluidized bed of solid catalyst particles. This made a largely closed thermochemical loop of underground coal gasification and above-ground “reverse gasification” possible by regenerating much of the injected water (this might be important in dry coal seams where the supply of makeup water from maintenance of the “gasification chamber below hydrostatic pressure in the surrounding aquifer to ensure that all groundwater flow in the area is directed inward” is inadequate). 
This occurred against the background of cutting-edge research at the Kaiser Wilhelm Institute for Coal Research, the Westfälische Berggewerkschaftskasse, Siemens, and the broader “network of research organizations” in “academia, industry and government laboratories.” I still have a lot of work to do, but right now I’m thinking that this involved the advent of “swept-frequency explosive seismic sources” that provide the copious 200Hz power necessary to resolve coal seams that are both deep and thin (echoing the real-world Vines Branch experiment of 1921), high-linearity molecular electronic transducer seismometers (“a closer relative to the old wet cells that used to operate doorbells than to anything now used in the electric-electronic field”) “assembled and processed… with simple robotic devices” and placed downhole below the “weathered-altered layer interface,” high-vacuum hot-cathode “electron beam recorders” that combine two emerging technologies of the fin de siècle (film photography and cathode ray tubes) to “produce very high resolution recordings on a virtually grainless recording medium” (similar to that in Lippmann holography) with the 100dB dynamic range and very high “density contrast in variable-density registration” necessary for “data to be recorded faithfully without using any automatic gain control” (the sheet beam current is modulated by a high-dynamic-range “electrostatic quadra-deflector” and the film transport mechanism has a “closed-loop, phase-locked servo-control system”), analog optical computers for seismic data processing using low-pressure mercury-vapor short-arc lamps (with water-cooled anodes) powerful enough to overcome the losses incurred “by changing the magnification of the Köhler illumination system to reduce the effective source size” and enhance the coherence of highly monochromatic line emissions (this allows deterministic deconvolution of the returns with a reference signal from a geophone near the seismic source, not entirely 
unlike the inverse of convolution by a noise wheel), “telemetry drill pipe” with high-reliability vacuum tube “repeater amplifiers” based on those in submarine communications cables, high-temperature downhole molecular electronic transducer inclinometers and even azimuth sensors with molten LiI electrolytes, downhole mud motors, rotary steerable systems, new cements and low-nickel steels for service in “thermo-chemically hostile” environments, and the “removal of sulfur, chlorine, nitrogen and mercury compounds from syngas at elevated temperature and pressures.” This cluster of technologies made it possible to exploit the most abundant fossil fuel reserves of all — coal seams too deep or thin to mine — and precipitated the ‘Black Revolution’ or ‘Coal Renaissance’ in the last months of Imperial Germany. This was far too late to influence the outcome of the war, but the seeds of the Black Revolution were soon planted across the world. The limits of underground coal gasification were soon pushed so far that ultra-deep coal seams bearing large quantities of geothermal heat were brought up to temperature with relatively small injections of oxygen. The significantly lower lifecycle costs of solid-fuel Stirling engine vehicles made carbon black the de facto standard motor fuel by the end of the Roaring Twenties. In addition, pump gasoline was blended with inexpensive “BTEX and aromatic amines” from underground coal thermal treatment (a sort of in situ mild gasification used to extract any valuable “phenolic and aromatic compounds” from the coal seam in preparation for UCG), and inexpensive “semi-cellulosic” ethanol from the consolidated bioprocessing of shredded whole Agave plants (with “low water consumption, high productivity on marginal land unsuitable for food crops, and low recalcitrance to conversion”) by a plant-pathogenic Fusarium oxysporum strain selectively bred for “sustained thermotolerance” in a continuous culture apparatus. 
This accidental three-pronged attack crowds and prices leaded gasoline out of the market for motor fuels, and averts the loss of some 184 million IQ points in the United States alone (the obsolescence of gasoline engines outside of aviation blunts the public health impact of blending toxic and carcinogenic aromatics into pump gasoline). With the coal ash left in place (underground and in situ) by the thermochemical regeneration of the carbon, and the all-important pre-combustion sulfur and mercury removal, the carbon black is, for all practical purposes, pure carbon. In addition, Stirling engine combustors with aggressive exhaust gas recirculation can easily achieve low PM and NOₓ emissions (without expensive platinum-group metal catalysts) because of their steady flow and low pressures. While the heavy-duty Stirling engines in “stationary power, railway locomotives, marine propulsion and the large off-highway vehicles used in mining, forestry and agriculture” certainly could burn an endless variety of torrefied organic wastes in fluidized bed combustors (as the “comminuting and scouring action of the fluidized beds” prevents fouling of the “heater tubes or heat pipe boiling sections” with ash), far more economic value can be created by using these wastes as substrates for the growth of thermophilic filamentous fungi in large-scale, low-pressure airlift bioreactors (to produce single-cell protein, secreted single-cell oil, and cellulosic fodder). As such, the Coal Renaissance is unavoidably carbon-positive in the short run (relative to the baseline Hydrocarbon Society), but by setting the stage for direct carbon and then direct ammonia fuel cells, it is nevertheless carbon-neutral in the long run, and in an ironic twist, winds up accelerating decarbonization. 
Hence the Mystère, the spiritual successor to the Model A with an aluminum alloy semi-monocoque joined by high-speed friction stir welding (using “self-reacting” contra-rotating pin tools with “scroll-type” shoulders), plus an alkaline direct carbon fuel cell as a drop-in replacement for the Stirling engine, compatible with existing “motor fuel storage and dispensing infrastructure,” but with double the tank-to-wheel efficiency. The sulfur- and ash-free carbon black fuel is “distributed pneumatically,” and dispersed in the electrolyte to form a “paste, slurry, or wetted aggregation of carbon particles” that is pumped into the anode chambers. The trick is to humidify the molten hydroxide salt (sparging it with water vapor, and maintaining a high partial pressure of water vapor in the cover gas) in order to disfavor the carbonation that kills the ionic conductivity at intermediate temperatures. This is not at all unlike the humidification of proton-exchange membranes. In NaOH electrolytes, carbonate “crystals are very much smaller and can easily be washed away” without plugging the pores of gas diffusion electrodes, and “impurities can not only be filtered out of the liquid electrolyte, but the whole electrolyte can easily be exchanged like the oil in the car.” The AFC lies in a Goldilocks zone, hot enough for the combustion reaction to proceed at reasonable rates without expensive PGM catalysts, but cool (and hydrous) enough that the chemical equilibrium disfavors both the corrosion of conventional steels and aluminum alloys by the molten salt (so the fuel cell can be constructed without expensive “ceramics or exotic alloys”) and the less-exothermic partial oxidation of carbon to CO.
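For a rough sense of why the partial oxidation of carbon to CO is worth disfavoring, the energetics can be sketched with textbook standard-state values. This is only a back-of-the-envelope illustration: the enthalpies and Gibbs energy below are ordinary 298 K reference figures rather than anything specific to the Mystère, the cell itself runs at a much higher temperature, and the function names are made up for this sketch.

```python
# Back-of-the-envelope energetics for carbon oxidation.
# ASSUMPTION: standard-state (298 K) textbook values; the real cell
# operates far hotter, so these are a rough guide only.
FARADAY = 96485.0   # C per mole of electrons

DH_CO2 = -393.5e3   # J/mol, C + O2 -> CO2 (enthalpy)
DG_CO2 = -394.4e3   # J/mol, C + O2 -> CO2 (Gibbs energy)
DH_CO = -110.5e3    # J/mol, C + 1/2 O2 -> CO (enthalpy)


def reversible_voltage(delta_g, electrons):
    """Ideal cell voltage for a reaction transferring `electrons` per formula unit."""
    return -delta_g / (electrons * FARADAY)


def heat_left_unreleased(co_fraction):
    """Share of the full heat of combustion that is never released when
    `co_fraction` of the carbon stops at CO instead of going on to CO2."""
    return co_fraction * (DH_CO2 - DH_CO) / DH_CO2


if __name__ == "__main__":
    print(f"ideal voltage, C -> CO2 (4 e-): {reversible_voltage(DG_CO2, 4):.3f} V")
    print(f"thermodynamic limit dG/dH:      {DG_CO2 / DH_CO2:.4f}")
    print(f"heat forgone if all C -> CO:    {heat_left_unreleased(1.0):.1%}")
```

On these figures the complete oxidation has an ideal voltage of about 1.02 V and a ΔG/ΔH ratio very close to one, while every carbon atom that stops at CO leaves roughly 72% of its heat of combustion on the table.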
“Because of the alkaline chemistry, oxygen reduction reaction kinetics at the cathode are much more facile than in acidic cells.” The service temperature of about 450°C and reasonably good chemical kinetics help to meet the challenging size, weight and power requirements of the automotive application. In many cases, the silver catalyst was obtained by recycling the cylinder wall sleeves of old Stirling engines (a solid lubricant taking on a second life as a catalyst). The power inverter of the Mystère is based on orientation-independent ignitron switches, rather than silicon IGBT switches. The OIIs are descended from the liquid metal plasma valves of the interwar period, which combined “the most desirable properties of classical liquid-metal arc devices with those of classical vacuum-arc devices,” and “spurred the rapid development of a broad range of basic power circuit topologies… including phase control, natural commutation, forced commutation, DC-to-AC inversion, cycloconversion, and many others.” “Regular ignitrons must be maintained precisely vertical, and are sensitive to movement (jolts, vibration, oscillation). Hence, for mobile applications such as locomotives, special construction features were added inside the ignitron, such as a splash screen, mercury retaining baffles, and a cathode-spot limiting/anchor ring.”

“Unlike the conventional mercury converter valve, the LMPV is a single-gap, single-anode device that utilizes the rapid recovery properties of high vacuum to give good voltage hold-off characteristics following current conduction in the vacuum arc mode. Since the ambient vapor density in the LMPV is much lower than in the multi-anode device, voltage hold-off between electrodes is determined by the dielectric properties of vacuum. Because there is then no need to subdivide the interelectrode gap to support the applied high voltage, no interelectrode grids are required and the valve size can be substantially reduced. Additionally, the LMPV has only one anode, rather than the six of a conventional valve… The condenser area can be kept relatively small since the amount of mercury emanating from the cathode is small, this is a result of the limited free surface of mercury in the cathode throat. The cathode is force-fed mercury in such quantities that only an amount of mercury necessary to provide short-term overcurrents is actually exposed to the discharge chamber. At an operating electron-to-atom emission ratio of 50 (much larger than that of a normal mercury arc discharge), the amount of mercury in the cathode throat is so small that it can be held in place by surface tension forces. The LMPV derives its orientation independence from this fact. Mercury ions and neutrals impinging on the anode are reflected to the condenser wall, which is also the vacuum envelope of the valve. Since the condenser temperature is kept below -35°C and since the sticking coefficient at that temperature (even for relatively fast mercury atoms) is close to unity, nearly all mercury particles are rapidly condensed. 
The ambient vapor pressure can thus be kept at a low level, with consequent improvement in the dielectric integrity of the valve… Appendage ion pumps, which have no moving parts, maintain a low internal pressure in the valve… The voltage drop across the plasma during the conduction phase is 20-30V (discharge voltage) and is almost independent of valve current. This voltage is considerably lower than that for either the conventional mercury or the solid-state valve, resulting in greatly reduced operating losses.” “Arc spots form on the inner and outer periphery of the cathode groove such that the arc power is distributed and maximum cooling results. Furthermore, the molybdenum becomes wetted by the mercury so that the arc spots are anchored at the [Hg-Mo] interface, thereby eliminating droplet ejection and insuring gravity independence… The use of this liquid metal cathode in which the arc spots are anchored and mercury evaporation is controlled eliminates a number of the main problems associated with the conventional use of mercury as a discharge cathode. For example, with a conventional device, such as an ignitron, which employs an extensive mercury pool, the evaporation of cathode material from the surface… greatly exceeds the efflux of mercury from the cathode spot itself. Furthermore, as the cathode spot moves erratically over the mercury surface, mercury droplets are ejected into the interelectrode space where they vaporize and cause a significant addition to the neutral vapor density. The resultant pressure combined with the Paschen and vacuum breakdown relationships result in relatively inferior high voltage and recovery properties for these devices in comparison to the LMPV… The cathode structure is constructed largely of molybdenum which resists arc erosion while also being easily wetted by mercury.”
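The quoted 20-30 V discharge voltage, being almost independent of valve current, lends itself to a quick conduction-loss estimate. The sketch below is only an illustration: the 25 V midpoint is taken from the quoted range, but the current, the bus voltage, and the function names are hypothetical numbers picked for the arithmetic, not figures from the source.

```python
# Rough conduction-loss arithmetic for a valve with a near-constant arc drop.
# ASSUMPTION: the current and bus voltage below are hypothetical examples;
# only the 20-30 V discharge voltage comes from the quoted description.

def conduction_loss_w(arc_drop_v, current_a):
    """Power dissipated in the arc: a fixed voltage drop times the current."""
    return arc_drop_v * current_a


def loss_fraction(arc_drop_v, bus_voltage_v, valves_in_series=1):
    """Share of the bus voltage (and so of the power) dropped across the valves."""
    return valves_in_series * arc_drop_v / bus_voltage_v


if __name__ == "__main__":
    drop = 25.0  # V, midpoint of the quoted 20-30 V range
    print(conduction_loss_w(drop, 200.0), "W dissipated at a hypothetical 200 A")
    print(f"{loss_fraction(drop, 1000.0):.1%} of a hypothetical 1000 V bus")
```

Because the drop is roughly constant, the loss grows only linearly with current, and the fractional loss shrinks as the bus voltage rises, which is why a fixed few tens of volts matters much less in high-voltage service than in a low-voltage circuit.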